In the rapidly evolving field of AI, the integration of speech and language models is crucial for enhancing human-machine interaction. The LLaMA-Omni model represents a significant leap forward in this area, focusing on creating a system that offers low-latency, high-quality speech interaction using large language models.

Architecture Overview:

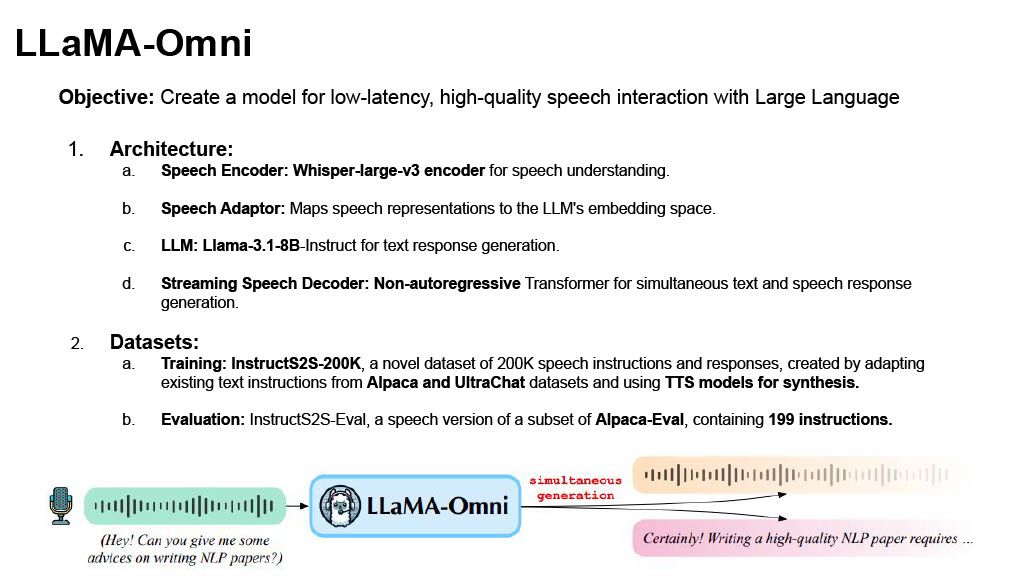

LLaMA-Omni's architecture comprises several key components designed to facilitate seamless speech-to-text and speech-to-speech interactions:

- Speech Encoder: Utilizes the Whisper-large-v3 encoder to transform raw speech into meaningful representations. By freezing the encoder's parameters during training, the system enhances efficiency and reduces processing time.

- Speech Adaptor: Converts high-dimensional speech features into a format compatible with the LLaMA model. It achieves this by downsampling speech representations, thereby reducing input size and computational complexity.

- Large Language Model (LLM): At the core of the system is LLaMA-3.1-8B-Instruct, selected for its superior reasoning abilities and alignment with human preferences. It processes speech representations to generate text responses directly.

- Speech Decoder: Uses a Non-Autoregressive Transformer to generate speech directly from the LLM's hidden states. This parallel processing drastically reduces latency compared to traditional autoregressive models.

- Discretisation and Vocoder: Converts discrete units generated by the speech decoder into a continuous audio waveform, transforming machine-generated symbols back into human-perceptible sounds. The HiFi-GAN vocoder plays a crucial role in this transformation.

Datasets and Training:

The training of LLaMA-Omni hinges on the InstructS2S-200K dataset, a novel collection specifically crafted for this model. This dataset comprises 200,000 speech instructions and responses, synthesized by adapting existing text instructions from prominent sources like the Alpaca and UltraChat datasets. The creation of this dataset involved an innovative process where text instructions were transformed into a speech-like format using Text-to-Speech (TTS) models.

To make the synthetic speech more natural and realistic, several key transformations were applied:

- Incorporating Filler Words: Words like "Hey," "So," and "um" were added to mimic natural pauses and hesitations commonly found in human speech, enhancing the authenticity of the generated audio.

- Number Conversion: Numerical data was converted into its spoken word equivalents (e.g., "10" became "ten"), ensuring smoother and more natural TTS synthesis.

- Condensing Instructions: Instructions were rephrased to be concise and to the point, avoiding verbosity. This ensured that the synthesized speech was easy to understand and suitable for natural dialogue.

The dataset also considers diversity in voice and tone. During speech synthesis, two distinct TTS models were employed:

- Instructions: The cosyVoice-300M-SFT model was used to synthesize instructions, offering the flexibility to randomly choose between male and female voices. This added variety and diversity to the training data, making the model more adaptable to different speaking styles.

- Responses: The VITS model trained on the LJSpeech dataset was used to synthesize responses, providing a consistent and standard voice for all responses, and ensuring uniformity in output quality.

By using this diverse and carefully crafted dataset, LLaMA-Omni was trained to handle a wide array of speech inputs, thereby improving its ability to generate natural, contextually relevant, and high-quality speech responses. This meticulous data preparation process plays a crucial role in the model's ability to effectively bridge the gap between speech and text, offering a robust solution for seamless human-machine interaction.

Evaluation Methods:

LLaMA-Omni’s performance is evaluated using a combination of automated metrics and human evaluation proxies. Key evaluation methods include:

- ChatGPT Score: Utilizes GPT-4o to evaluate the quality of responses, focusing on content accuracy and stylistic suitability for spoken dialogue.

- Speech-Text Alignment: Measures how well the spoken response aligns with the text response using metrics like Word Error Rate (WER) and Character Error Rate (CER).

- Speech Quality: Assesses the naturalness and clarity of synthesized speech using UTMOS, a Mean Opinion Score (MOS) prediction model.

- Response Latency: Measures the delay between the user's spoken instruction and the model's response, a critical factor in real-time interactions.

Conclusion:

LLaMA-Omni represents a sophisticated integration of speech and language processing, offering a low-latency and high-quality interaction experience. Bridging the gap between speech and text paves the way for more natural and seamless human-machine communication.